Failover clustering provides failure transparency. Although the inventory database is unavailable for approximately 10-15 seconds during a failover, the database comes back online under the same instance name. The first step in the failover process is to restart SQL Server on the same node. The instance is restarted on the same node because the cluster first assumes that a transient error caused the health check to fail. If the restarted instance does not respond immediately, the SQL Server group fails over to another node in the cluster (the secondary node). The network name of the server running SQL Server is unregistered from DNS. The SQL Server IP address is bound to the network interface card (NIC) on the secondary node. The disks associated with the SQL Server instance are mounted on the secondary node. After the IP address is bound to the NIC on the secondary node, the network name of the SQL Server instance is registered in DNS. After the network name and disks are online, the SQL Server service is started. After the SQL Server service is started, SQL Server Agent and full-text indexing are started.

Failover clustering provides automatic failover without affecting transaction performance. Although failover clustering provides failure transparency, it has a wait of 10-15 seconds on average for a failover to occur. In addition, a cluster failover requires a restart of the Microsoft SQL Server instance. This restart causes all caches to start empty and affects performance until the caches are repopulated.
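Because the instance returns under the same name after roughly 10-15 seconds, a client can often ride out a cluster failover by retrying the same connection. The following is a minimal Python sketch of that idea, not a prescribed implementation. The function `connect_with_retry`, its parameters, and the zero-argument `connect` callable are hypothetical names introduced here, and the retry count and delay are assumptions chosen to cover the outage window described above.

```python
import time


def connect_with_retry(connect, retries=6, delay_seconds=5.0, sleep=time.sleep):
    """Call connect() until it succeeds or the retries are used up.

    connect: hypothetical zero-argument callable that returns a connection,
             or raises ConnectionError while the instance is restarting.
    sleep:   injectable wait function, so the loop can be tested without
             actually pausing.
    """
    last_error = None
    for attempt in range(1, retries + 1):
        try:
            # The server name never changes: a clustered instance comes
            # back under the same network name after the failover.
            return connect()
        except ConnectionError as exc:
            last_error = exc
            if attempt < retries:
                sleep(delay_seconds)
    # Every attempt failed, so surface the most recent error.
    raise last_error
```

With the defaults, the loop waits up to 25 seconds in total, which is longer than the average failover time quoted above. In a real application, `connect` would wrap whatever driver call opens the connection.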

Database mirroring can fail over to the mirror automatically. However, transactions have to be committed on both the principal and the mirror, which affects the performance of applications. Although database mirroring can fail over to the mirror automatically, the mirror database is on a separate instance. During the failover, the server name used to reach the inventory database changes, which breaks the drop shipping service until connections can be moved and re-created.

Database mirroring configured in High Availability operating mode provides automatic detection and automatic failover. Failover generally occurs within 1-3 seconds. By using the capabilities of the Microsoft Data Access Components (MDAC) libraries that ship with Microsoft Visual Studio 2005 and later, applications can use the transparent client redirect capabilities to mask any failures from users. Database mirroring also contains technology that enables the cache on the mirror to be maintained in a semi-hot state so that application performance is not appreciably affected during a failover.
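Transparent client redirect depends on the client knowing the mirror's name in advance. In the ADO.NET SqlClient provider, the mirror is named with the `Failover Partner` connection-string keyword. The sketch below only assembles such a string in Python for illustration. The helper `mirrored_connection_string` and the server and database names in the usage note are hypothetical, and the use of integrated security is an assumption.

```python
def mirrored_connection_string(principal, mirror, database):
    """Build a SqlClient-style connection string that names both partners.

    principal: server that currently hosts the principal database.
    mirror:    server the client should try if the principal is unreachable.
    """
    parts = [
        ("Data Source", principal),
        ("Failover Partner", mirror),
        ("Initial Catalog", database),
        ("Integrated Security", "True"),  # assumption: Windows authentication
    ]
    return ";".join(f"{key}={value}" for key, value in parts) + ";"
```

For example, `mirrored_connection_string("SQL1", "SQL2", "Inventory")` yields `Data Source=SQL1;Failover Partner=SQL2;Initial Catalog=Inventory;Integrated Security=True;`. The initial catalog matters here because mirroring is configured per database, not per instance.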

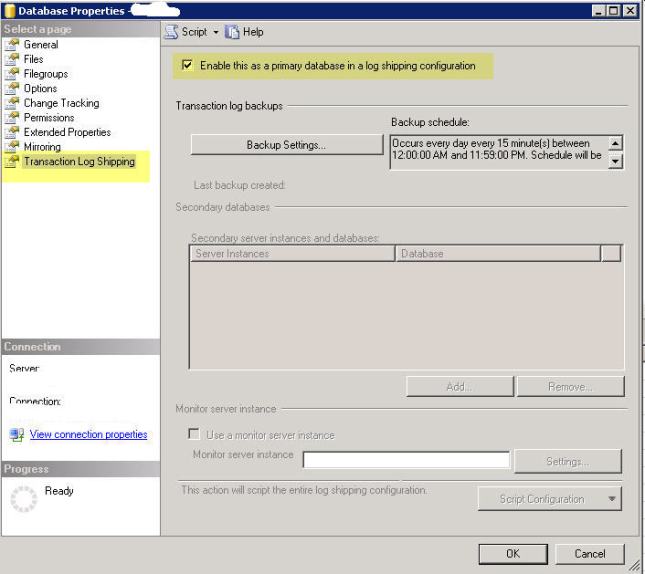

Replication and log shipping require manual detection as well as manual failover. Users cannot be connected to a database while it is being restored in log shipping mode.

Important
